Chunk Uploading and Downloading (Part 1)

May 21, 2021

Recently I’ve been practicing some system design challenges. One of the reasons is to learn a diagramming tool other than Visio. Visio is amazing, but it’s very costly. Currently I’m trying Excalidraw. It’s free, open source, supports collaboration, and its touch/stylus support is not bad.

This time I want to share a design for uploading and downloading large files. There are a few challenges that need to be solved:

- Failed requests. Uploading a large file can take a while, so the request may time out or be interrupted. Hence I need a way to upload the file partially and resume the upload when a problem happens.

- Since the file can be uploaded partially, there may be cases where the user never completes the upload. Hence I need a way to easily clean up these orphaned, partially uploaded files.
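To make the second challenge concrete, here is a minimal sketch of one possible cleanup rule. It assumes each upload session records when its last chunk arrived and that anything idle for too long counts as orphaned. `UploadSession`, `purge_orphans`, and the idle threshold are names I made up for illustration; they are not part of the actual design.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class UploadSession:
    """Hypothetical record of one in-progress upload."""
    upload_id: str
    last_activity: float  # UNIX timestamp of the last received chunk
    chunks: Dict[int, bytes] = field(default_factory=dict)


def purge_orphans(sessions: Dict[str, UploadSession],
                  max_idle_seconds: float,
                  now: Optional[float] = None) -> List[str]:
    """Delete sessions idle longer than max_idle_seconds and return their ids."""
    now = time.time() if now is None else now
    expired = [uid for uid, s in sessions.items()
               if now - s.last_activity > max_idle_seconds]
    for uid in expired:
        del sessions[uid]  # dropping the session drops its partial chunks too
    return expired
```

A scheduled job could call `purge_orphans` periodically. Because the chunks live in temporary storage, the cleanup never has to touch the main storage.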

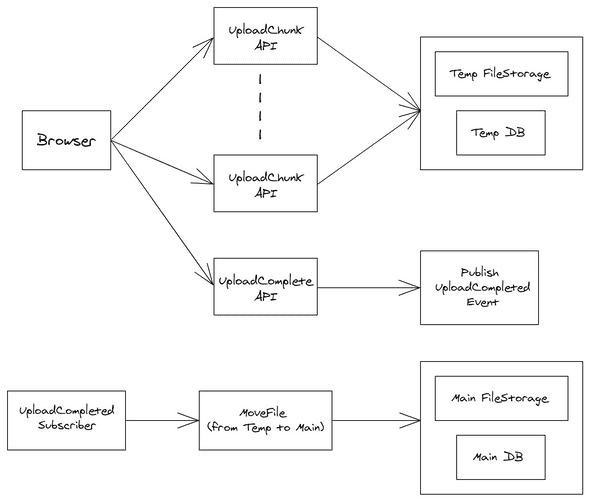

Here is the User-API flow that I came up with.

Basically, the flow is:

- Client - Split the file into smaller chunks

- Client - Send the file chunks in parallel

- Server - Store the file chunks in a temporary storage

- Client - Inform server that all chunks have been sent

- Server - Move the file chunks from temporary storage to permanent/main storage
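The client side of the steps above can be sketched roughly as follows. This is only an illustration: `FakeServer` is an in-memory stand-in for the real API, and `CHUNK_SIZE`, `put_chunk`, and `complete` are hypothetical names. The move to main storage is deliberately left out, since the design does it in the background.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

CHUNK_SIZE = 4  # bytes; tiny on purpose so the example is easy to follow


def split_into_chunks(data: bytes, chunk_size: int = CHUNK_SIZE) -> List[bytes]:
    """Split the file content into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


class FakeServer:
    """In-memory stand-in for the upload API (hypothetical)."""

    def __init__(self) -> None:
        self.temp: Dict[str, Dict[int, bytes]] = {}  # temporary storage
        self._lock = threading.Lock()

    def put_chunk(self, upload_id: str, index: int, chunk: bytes) -> None:
        # Storing by index makes re-sending the same chunk harmless.
        with self._lock:
            self.temp.setdefault(upload_id, {})[index] = chunk

    def complete(self, upload_id: str, total_chunks: int) -> List[int]:
        """Return the indexes still missing; an empty list means all chunks arrived."""
        with self._lock:
            received = self.temp.get(upload_id, {})
            return [i for i in range(total_chunks) if i not in received]


def upload(server: FakeServer, upload_id: str, data: bytes) -> List[int]:
    chunks = split_into_chunks(data)
    # Send the chunks in parallel, then tell the server we are done.
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = [pool.submit(server.put_chunk, upload_id, i, c)
                   for i, c in enumerate(chunks)]
        for f in futures:
            f.result()  # surface any exception raised while sending
    return server.complete(upload_id, len(chunks))
```

If `upload` returns a non-empty list, the client only needs to resend those chunk indexes instead of the whole file.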

The file stored in the main storage is not aggregated. It is still in multiple chunks. The reason is that the download process has the same problem as the first upload challenge above, i.e. a failed response due to an interrupted network.
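Keeping the chunks separate means the client can fetch them one by one and retry only the chunk that failed. Here is a rough sketch of that idea. `fetch_chunk` is a hypothetical callable standing in for the real download request, and `received` holds whatever was already downloaded before the interruption.

```python
from typing import Callable, Dict


def download(fetch_chunk: Callable[[int], bytes],
             total_chunks: int,
             received: Dict[int, bytes],
             max_attempts: int = 3) -> bytes:
    """Fetch only the chunks missing from `received`, retrying each one individually."""
    for index in range(total_chunks):
        if index in received:
            continue  # already downloaded before the interruption
        for attempt in range(max_attempts):
            try:
                received[index] = fetch_chunk(index)
                break
            except ConnectionError:
                if attempt == max_attempts - 1:
                    raise  # give up; `received` still holds the progress so far
    # Reassemble the file on the client side, in chunk order.
    return b"".join(received[i] for i in range(total_chunks))
```

Since `received` is passed in and updated in place, a later call can continue from where the previous one stopped.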

In the design, you’ll notice that moving the file is done as a background process (pub-sub approach). There are a couple of reasons why it’s better to do it this way:

- In most cases, when users upload a file, they only care that the upload has completed. They don’t care whether the uploaded file is ready to be used or processed in the next step. Hence they shouldn’t need to wait for the FileMove process to finish.

- Even in cases where users need to know when the file is ready to be used, there will be many endpoints that can receive the request, with a load balancer in front. This means that the main storage may be located on a different machine or even in a different region. But when uploading a chunk, we want to make it as fast as possible. This means we need to:

  - Store each chunk in the temporary storage that is nearest to the endpoint receiving the chunk stream. This means chunks of the same file may end up in different locations.

  - Keep the main storage centralized.

What this means is that the data transfer from temporary storage to main storage may take some time. Hence it’s better to do it as a background process, where the user is informed asynchronously when the process is completed.
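As a rough illustration of the background move, the sketch below uses Python’s in-process `queue.Queue` and a worker thread in place of a real message queue. The region names, `on_upload_complete`, `file_move_worker`, and the `notifications` list are all assumptions made up for the example.

```python
import queue
import threading
from typing import Dict, List, Tuple

# Hypothetical stores: one temporary storage per region, one central main storage.
# Chunks are keyed by (upload_id, chunk_index).
temp_storage: Dict[str, Dict[Tuple[str, int], bytes]] = {
    "region-a": {},
    "region-b": {},
}
main_storage: Dict[Tuple[str, int], bytes] = {}
notifications: List[str] = []  # stand-in for notifying the user asynchronously
move_queue = queue.Queue()


def on_upload_complete(upload_id: str) -> str:
    """API handler: publish the move request and return without waiting."""
    move_queue.put(upload_id)
    return "accepted"


def file_move_worker() -> None:
    """Subscriber: move chunks from every temporary storage into main storage."""
    while True:
        upload_id = move_queue.get()
        if upload_id is None:  # sentinel used to stop the worker
            move_queue.task_done()
            break
        for region in temp_storage.values():
            for key in [k for k in region if k[0] == upload_id]:
                main_storage[key] = region.pop(key)
        notifications.append(upload_id)  # the file is now ready to be used
        move_queue.task_done()
```

Starting the worker with `threading.Thread(target=file_move_worker, daemon=True).start()` and then calling `on_upload_complete("u1")` returns immediately, while the chunks are collected from both regions in the background.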

This pretty much sums up the whole process. In the next few articles, I’m going to discuss the technical details of the process, such as:

- Splitting the file and sending the chunks to the server

- Making the process more resilient so that an upload or download can be resumed from the state it was in before the interruption

- The simple server code that I use to test the feature (I am using Azure Functions with C# and Azure Table/Blob/Queue storage)